Listening over headphones (binaural listening) is a different experience from listening to speakers (transaural listening). Here are some important perceptual differences:

The "problem" with headphone listening is that it short-circuits the psychoacoustic system that helps us localize sound sources. Headphones create unnatural listening conditions: if a sound is panned 100% left, we hear all of it in the left ear and none of it in the right ear. Nothing in real life corresponds to this. When a physical sound source is on our left side, some of the sound still reaches our right ear, slightly later and quieter because the head is in the way, yet we clearly perceive the source to be on the left. Common complaints about headphones are that the sound seems to come from inside the head and that it is harsh or fatiguing.
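To make that crosstalk concrete, here is a minimal Python sketch of a simple headphone crossfeed. Each channel receives a delayed, low-passed, attenuated copy of the opposite channel, so a hard-panned sound no longer lands in one ear only. This is a much cruder technique than HRTF filtering and is not the processing used for the samples here. The delay comes from Woodworth's spherical-head approximation of the interaural time difference (ITD). The head radius, the -6 dB gain, and the 700 Hz head-shadow cutoff are assumed illustrative values, and the function names are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C
HEAD_RADIUS = 0.0875    # m, assumed average head radius


def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds (Woodworth spherical-head model).

    Intended for azimuths between 0 and 90 degrees from straight ahead.
    """
    theta = np.radians(abs(azimuth_deg))
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + np.sin(theta))


def head_shadow(x: np.ndarray, fs: int, delay: int, gain: float, cutoff_hz: float) -> np.ndarray:
    """Crude far-ear path: one-pole low-pass, then delay and attenuate."""
    a = np.exp(-2.0 * np.pi * cutoff_hz / fs)  # one-pole smoothing coefficient
    filtered = np.zeros(len(x))
    state = 0.0
    for i, sample in enumerate(x):
        state = (1.0 - a) * sample + a * state
        filtered[i] = state
    delayed = np.zeros(len(x))
    if delay < len(x):
        delayed[delay:] = filtered[: len(x) - delay]
    return gain * delayed


def crossfeed(left, right, fs, azimuth_deg=90.0, gain_db=-6.0, cutoff_hz=700.0):
    """Mix a shadowed copy of each channel into the opposite ear."""
    left = np.asarray(left, dtype=float)
    right = np.asarray(right, dtype=float)
    delay = int(round(woodworth_itd(azimuth_deg) * fs))  # ITD in samples
    gain = 10.0 ** (gain_db / 20.0)                      # dB to linear amplitude
    out_left = left + head_shadow(right, fs, delay, gain, cutoff_hz)
    out_right = right + head_shadow(left, fs, delay, gain, cutoff_hz)
    return out_left, out_right
```

For a source at 90 degrees, the model gives an ITD of about 0.66 ms, or 31 samples at 48 kHz. Real far-ear responses vary with frequency in far more detail than one low-pass filter can capture, and that detail is what HRIR convolution provides.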

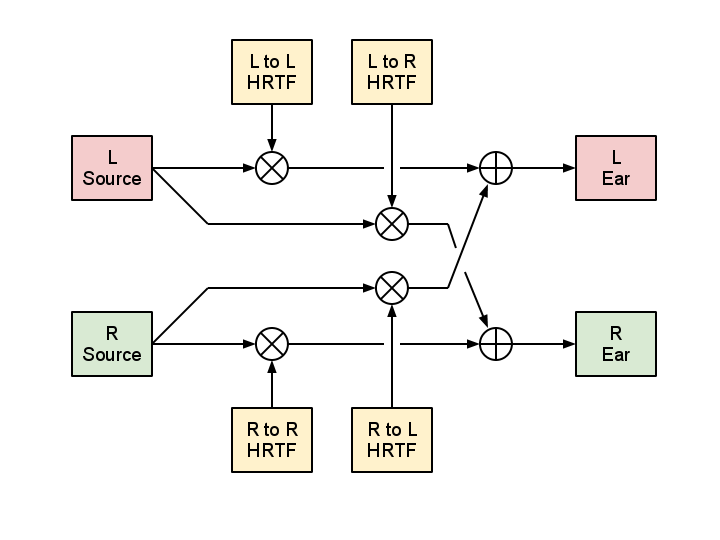

We can simulate this crosstalk, along with the filtering of the outer ear, by convolving the signal with a head-related impulse response (HRIR). However, what we simulate this way is a sound source at a fixed distance (typically about 1 meter, depending on how the HRIR was measured) in an anechoic environment. This gets us only halfway, to be generous, toward a natural, open-air listening experience over headphones. To round out the effect, we can instead convolve the signal with a binaural room impulse response (BRIR), which captures the complete coloration: the effects of the head and outer ear as well as the reflections and reverberation of a typical room.
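In code, the convolution step might look like the following minimal sketch, which assumes the stereo mix is played through two virtual loudspeakers. Each loudspeaker feed is convolved with the impulse response from that speaker to each ear, and the results are summed per ear. The same function works for anechoic HRIRs or for BRIRs; only the impulse responses differ. The four-response array layout, the function name `render_binaural`, and the toy impulse responses below are assumptions for illustration; in practice the responses would come from a measured HRIR or BRIR set.

```python
import numpy as np


def render_binaural(stereo: np.ndarray, irs: np.ndarray) -> np.ndarray:
    """Render a stereo mix through two virtual loudspeakers.

    stereo: shape (2, n), the left and right loudspeaker feeds.
    irs:    shape (2, 2, taps), where irs[speaker, ear] is the impulse
            response (HRIR or BRIR) from that speaker to that ear.
    Returns a (2, n + taps - 1) array holding the left-ear and right-ear signals.
    """
    n_out = stereo.shape[1] + irs.shape[2] - 1
    ears = np.zeros((2, n_out))
    for speaker in range(2):
        for ear in range(2):
            # Each ear hears both speakers: the direct (same-side) path
            # and the crosstalk (opposite-side) path.
            ears[ear] += np.convolve(stereo[speaker], irs[speaker, ear])
    return ears


# Toy "head": the far ear gets the sound 3 samples later at half amplitude.
# Measured HRIRs/BRIRs are far more detailed than this.
irs = np.zeros((2, 2, 8))
irs[0, 0, 0] = irs[1, 1, 0] = 1.0   # direct paths
irs[0, 1, 3] = irs[1, 0, 3] = 0.5   # crosstalk paths
stereo = np.zeros((2, 16))
stereo[0, 0] = 1.0                  # an impulse panned 100% left
ears = render_binaural(stereo, irs)
```

With the hard-left impulse, the left ear receives the impulse at sample 0 and the right ear receives a half-amplitude copy at sample 3, which is exactly the crosstalk that plain headphone playback lacks. For long BRIRs, `scipy.signal.fftconvolve` is much faster than `np.convolve`.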

When switching rapidly between headphones and loudspeakers, room coloration becomes evident. Room resonances put peaks and valleys in the frequency response. Early reflections also become apparent, and unlike the long reverberant tail of a Gothic cathedral, they are usually not pleasant. The sound becomes, in a word, convoluted. Yet open-air listening is usually preferable to headphone listening. Most listeners would say this is because it sounds "more open," "spacious," and "less fatiguing," but what acoustic differences explain this preference? I would assert that room modes, early reflections, and outer-ear filtering are actually favorable, because they provide an interactivity that headphones do not. As we move around, the peaks and valleys shift and the filtering of the pinna changes. This dynamic feedback from room resonance and pinna filtering dramatically enhances the perception of space and distance.
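The position dependence of room resonance can be illustrated with the simplest case: axial modes between two parallel rigid walls. Mode n has frequency f_n = n·c / (2L), and its sound pressure at distance x from one wall varies as |cos(nπx/L)|, so moving a short distance changes which resonances are emphasized. This minimal sketch assumes an idealized 5 m room dimension and ignores tangential and oblique modes, wall absorption, and loudspeaker placement; the function names are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s


def axial_mode_frequencies(length_m: float, n_modes: int) -> np.ndarray:
    """Frequencies (Hz) of the first n axial modes between two parallel walls."""
    n = np.arange(1, n_modes + 1)
    return n * SPEED_OF_SOUND / (2.0 * length_m)


def relative_mode_pressure(length_m: float, n_modes: int, position_m: float) -> np.ndarray:
    """Relative pressure magnitude (0 to 1) of each axial mode at a listening position."""
    n = np.arange(1, n_modes + 1)
    return np.abs(np.cos(n * np.pi * position_m / length_m))


room_length = 5.0
freqs = axial_mode_frequencies(room_length, 3)
for x in (1.0, 2.5):
    levels = relative_mode_pressure(room_length, 3, x)
    print(f"x = {x} m: " + ", ".join(f"{f:.1f} Hz -> {p:.2f}" for f, p in zip(freqs, levels)))
```

The first three modes fall at 34.3, 68.6, and 102.9 Hz. Moving from 1 m to the middle of the room (2.5 m) puts the listener at a pressure node of the first and third modes and at a pressure maximum of the second, which is the kind of shifting response that headphones cannot reproduce.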

In my opinion, this is the major shortcoming of HRTF filtering. While HRTF and BRIR convolution can simulate a source at an arbitrary point in space, without head tracking they do not place the listener in a dynamic sound environment that responds to the listener's physical movement.
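Head tracking restores part of that interactivity by keeping virtual sources fixed in the room rather than fixed to the head. Here is a minimal sketch of the core idea. It assumes azimuths in degrees, measured in the same rotational direction for source and head, and an HRIR set measured on a hypothetical 15-degree grid: subtract the head's yaw from the source's world azimuth, then pick the nearest measured HRIR. The function names and the grid are illustrative assumptions.

```python
import numpy as np


def relative_azimuth(source_az_deg: float, head_yaw_deg: float) -> float:
    """Source direction relative to where the listener faces, wrapped to [-180, 180)."""
    return (source_az_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def nearest_hrir_index(rel_az_deg: float, grid_deg: np.ndarray) -> int:
    """Index of the measured HRIR direction closest to rel_az_deg (circular distance)."""
    diff = (grid_deg - rel_az_deg + 180.0) % 360.0 - 180.0
    return int(np.argmin(np.abs(diff)))


grid = np.arange(0.0, 360.0, 15.0)  # hypothetical measurement grid: 0, 15, ..., 345 degrees

# A source fixed at 30 degrees in the room. As the head turns toward it,
# the relative azimuth, and therefore the chosen HRIR, changes.
for yaw in (0.0, 15.0, 30.0):
    rel = relative_azimuth(30.0, yaw)
    print(yaw, rel, grid[nearest_hrir_index(rel, grid)])
```

A practical renderer would repeat this for every audio block and crossfade or interpolate between neighboring HRIRs so that head movement does not cause audible clicks.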

I have chosen video game music to showcase HRTF and BRIR filtering because of its clean, persistent signal (free from reverb, chorus, flanging, and similar effects processing) and its pronounced stereo separation. These qualities make the effect of HRTF/BRIR processing easy to hear.

Audio samples are labeled with the following suffixes: